3. Modeling Probability of Default

Modeling the probability that a loan defaults is critical to our investment strategy. After our exploration of 2013 and 2014 data, we select predictors that present promising separations between defaulted and repaid loans. We also make sure not to use any predictors capturing information that we would not have at the time of selecting which loans to invest in.

Summary of variables

Response:

Newly defined variable, paid, which was a binary variable indicating whether a loan had any status other than ‘Fully Paid’.

Predictors:

funded_amnt: The total amount committed to that loan at that point in time.home_ownership: The home ownership status provided by the borrower during registration or obtained from the credit report.int_rate: Interest Rate on the loan.purpose: A category provided by the borrower for the loan request.annual_inc: The self-reported annual income provided by the borrower during registration.verification_status: Indicates if income was verified by LC, not verified, or if the income source was verified.dti: Debt to income ratio - ratio calculated using the borrower’s total monthly debt payments on the total debt obligations, excluding mortgage and the requested LC loan, divided by the borrower’s self-reported monthly income.revol_util: Revolving utilization - ratio of total current balance to credit limit for all revolving accounts.grade: Lending club assigned loan grade.-

term: The number of payments on the loan. Values are in months and can be either 36 or 60.We use one hot encoding for the categorical variables (employment length, home ownership, verification status, grade and purpose).

We normalize all columns with values that are not percentages (funded amount, annual income and debt-to-income ratio).

We drop rows with null values in any of the columns.

Handling Imbalanced Class

The machine learning algorithms we select to predict future loans as defaulting or not defaulting have trouble learning to predict underrepresented classes. As seen in the table below, the defaulted class in the training data is under represented. To address this class imbalance problem, we use synthesis of new minority class instances (SMOTE). For each minority class, SMOTE calculates the k nearest neighbors. Depending on the amount of oversampling required, one or more of the k-nearest neighbors are selecte to create synthetic examples to augment the training dataset. We oversample the defaulted loan class to achieve a 50-50 split of classes using the imbalanced-learn library.

| number of loans | percent of loans | SMOTE number of loans | SMOTE percent of loans | |

|---|---|---|---|---|

| repaid | 289177 | 0.78 | 289177 | 0.5 |

| defaulted | 81266 | 0.22 | 289177 | 0.5 |

Machine Learning Models

Our goal is to achieve the highly accurate predictions of the probability of a loan defaulting with our selected features, and use this predicted probability as an input to our investment strategy. We use a suite of models to predict this probability and conduct hyperparameter searches using grid search. The models we explore are listed below with their tuned hyperparameters.

- Logistic Regression

- QDA

- Random Forest

- Max depth: 12

- Number of estimators: 50

- AdaBoost

- max_depth: None

- Number of estimators: 50

- learning_rate: 1.0

- Light GBM

- Learning_rate: 0.1

- boosting_type: gradient boosted decision tree

- max_depth: 15

- Neural Network: Multi-layer Perceptron

- Nodes by layer: 40, 20, 10

The baseline accuracy, i.e. accuracy achieved when the majority class (repaid loans) is always predicted, is 0.82 on the training set and 0.78 on the test set. While most of our models do not achieve baseline accuracy, we attribute this to discarding variables we identify as potentially containing information about protected classes.

As displayed in the table below, the highest test accuracy is achieved by the neural network, followed by QDA. However, QDA achieves a high test accuracy because it predominantly predicts that loans are repaid (only 0.07% of loans are predicted to default). A dive into QDA’s training accuracy reveals a similar pattern, where only 3.4% of the loans are predicted to default, despite 50% of the loans resulting in true defaults. Given this trend, we don’t trust that QDA has learnt the nuances of our training data, so we choose the next contender, logistic regression, to take forward to our return on investment analysis, along with the neural network.

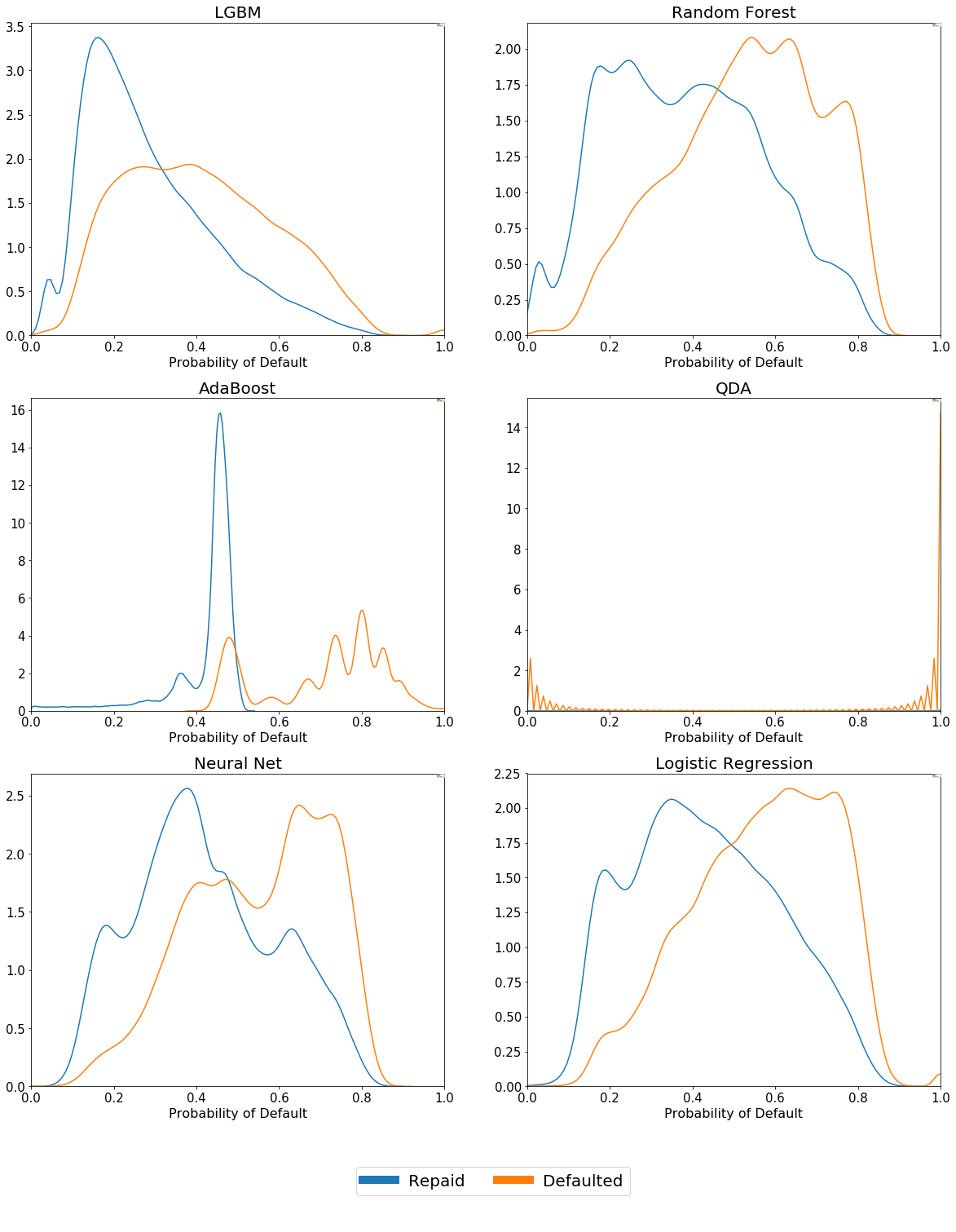

Heuristically, it mattered to us that our models be able to assign different spectrums of default probabilities to loans that went on to default compared with those that repaid succesfully. We considered the probability distributions associated with our two outcome classes in the training data - aware of the fact that we shouldn’t read too much into this since there was clearly the chance our models were overfit.

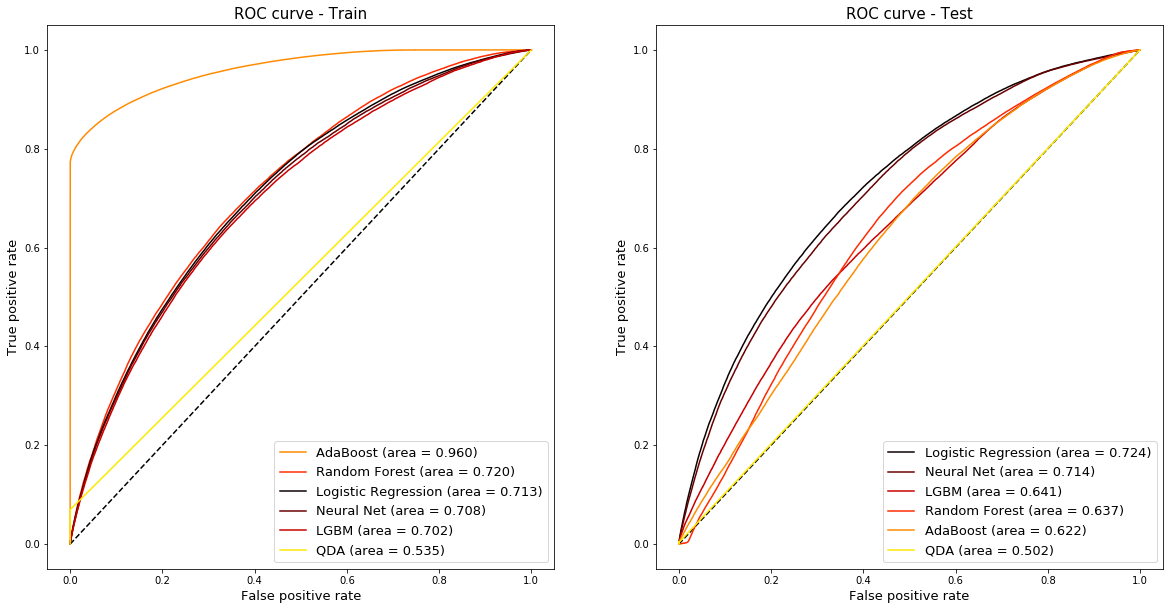

Financial data is notoriously noisy, so it was promising that our models were generally able to differentiate between the different outcomes based on our input data. We were interesed in quantifying the predictive power of our models, hence we used ROC AUC scores as well as accuracy scores to make our final decision on which models to take forwards. As this is a highly imbalanced problem, we seek to confirm that our best contendors from an accuracy standpoint do maximize the area under the ROC curve. As shown in the table, the highest AUC is achieved by the logistic regression model, followed by the neural network, confirming our selection.

Training and test accuracies by model

| Training Accuracy | Training AUC | Test Accuracy | Test AUC | |

|---|---|---|---|---|

| Logistic Regression | 0.655 | 0.713 | 0.746 | 0.724 |

| Neural Network | 0.676 | 0.708 | 0.781 | 0.714 |

| Light GBM | 0.786 | 0.702 | 0.702 | 0.641 |

| Random Forest | 0.694 | 0.720 | 0.718 | 0.637 |

| AdaBoost | 0.896 | 0.960 | 0.663 | 0.622 |

| Quadratic Discriminant Analysis | 0.535 | 0.535 | 0.778 | 0.502 |

Probability of Default Distributions for Training Data

Area under the receiver operating characteristic (ROC) curve for training and test data

Analysis

From these results, it was clear we should take the Logistic Regression model and our MLP forward as our final models. Financial data is notoriously noisy, so the fact that both these models achieved close to/superior accuracy scores compared with the the baseline accuracy, and that both maintained their AUC across the training and test sets was certainly promising as we looked to use these models to predict Return on Investment.